Agentic Infrastructure in Practice

Enterprise AI conversations still over-index on models, focusing on benchmarks, parameter counts, feature comparisons, and release cycles. Yet production environments rarely fail because a model lacks capability. More often, they fail because the workflow architecture was never designed to absorb autonomy in the first place.

When digital production scales without structural discipline, governance erodes quietly. When governance tightens reactively, innovation stalls. Both outcomes stem from the same architectural flaw: layering AI onto systems that were not built for persistent context, background execution, and policy-bound automation.

The competitive advantage is not in the model – it is in the pipeline.

The institutions that succeed will not be those experimenting most aggressively. They will be those that design structured agentic systems capable of increasing throughput while preserving accountability. In that environment, the competitive advantage is not the model itself but the production pipeline that governs how intelligence moves through the organization.

The question is not whether to use AI. The question is whether your infrastructure is designed for autonomy under constraint.

Metaphor: The Factory Floor, Modernized

Think of a legacy archive or production system as a dormant factory. The machinery exists. The materials are valuable. The workforce understands the craft. But everything runs manually, station by station. Modernization does not mean replacing the factory. It means upgrading the control system.

CASE STUDY: SandSoft Digital Archiving at Scale

In the SandSoft case study, the transformation began with physical ingestion and structured digitization. Assets were scanned, tagged, layered into archival and working formats, and indexed with AI-assisted metadata.
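To make "input normalization" concrete, here is a minimal Python sketch, assuming each scan yields raw bytes for an archival master plus a list of raw tags from an upstream (human or AI-assisted) tagging step. The point is that every asset ends up in the same shape regardless of how messy its inputs were. The `AssetRecord` fields, file extensions, and path layout are hypothetical illustrations, not details taken from the SandSoft project.

```python
from dataclasses import dataclass
from hashlib import sha256


@dataclass(frozen=True)
class AssetRecord:
    asset_id: str
    archival_uri: str      # lossless master kept for preservation
    working_uri: str       # lighter derivative used in day-to-day production
    checksum: str          # SHA-256 of the master, used to detect silent changes
    tags: tuple[str, ...]  # normalized metadata tags


def ingest(asset_id: str, master: bytes, raw_tags: list[str]) -> AssetRecord:
    """Normalize one scanned asset into a stable, indexable record."""
    # Trim, lowercase, drop empties, deduplicate, and sort so tag order is stable.
    tags = tuple(sorted({t.strip().lower() for t in raw_tags if t.strip()}))
    return AssetRecord(
        asset_id=asset_id,
        archival_uri=f"archive/{asset_id}.tiff",  # hypothetical storage layout
        working_uri=f"working/{asset_id}.jpg",
        checksum=sha256(master).hexdigest(),
        tags=tags,
    )


def build_index(records: list[AssetRecord]) -> dict[str, list[str]]:
    """Map each tag to the IDs of the assets that carry it."""
    index: dict[str, list[str]] = {}
    for record in records:
        for tag in record.tags:
            index.setdefault(tag, []).append(record.asset_id)
    return index


# Raw tags as an upstream tagging step might emit them: inconsistent case, spacing, blanks.
records = [
    ingest("A-001", b"scan-1", ["Portrait", " portrait ", "1920s"]),
    ingest("A-002", b"scan-2", ["landscape", "1920s", ""]),
]
index = build_index(records)
```

Because the record is immutable and its tags are deduplicated and sorted, ingesting the same scan with the same tags twice yields equal records, which is the kind of stability later training and governance steps can rely on.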

That was not digitization for convenience. It was input normalization. Once the inputs were stable, the team introduced LoRA (low-rank adaptation): lightweight, domain-specific model training anchored entirely in owned source material.
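For readers unfamiliar with LoRA, its standard formulation shows why the training counts as lightweight. The pretrained weight matrix $W_0 \in \mathbb{R}^{d \times k}$ stays frozen, and only a low-rank update $BA$ is learned, with $\alpha$ a scaling hyperparameter:

$$
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

Only $A$ and $B$ are trained, so a layer contributes $r(d+k)$ trainable parameters instead of $dk$. The case study does not state its layer sizes or ranks; purely as an illustration, with $d = k = 4096$ and $r = 8$:

$$
r(d+k) = 8 \times 8192 = 65{,}536 \quad \text{vs.} \quad dk = 4096^2 = 16{,}777{,}216
$$

That is 1/256 of the full matrix, which is why adaptation can stay anchored in a modest, owned corpus.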

Then came the critical layer: agentic governance.

Watermarking at creation. Embedded licensing metadata. Monitoring agents scanning for IP misuse. Automated compliance reporting. This is not AI as a creative distraction. It is AI as a controlled production subsystem.
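The governance layer can be illustrated with a minimal Python sketch, assuming an in-memory registry keyed by a SHA-256 content fingerprint. The fingerprint is a deliberate simplification standing in for a watermark: real watermarking embeds a signal meant to survive edits and re-encoding, whereas an exact hash only recognizes byte-identical copies. All names here (`register_at_creation`, `monitor`, `compliance_report`, `example-owner`) are hypothetical and do not describe SandSoft's actual implementation.

```python
from collections import Counter
from dataclasses import dataclass
from hashlib import sha256


@dataclass(frozen=True)
class License:
    owner: str
    permitted_uses: frozenset[str]


def fingerprint(content: bytes) -> str:
    # Exact-match stand-in for a watermark; only byte-identical copies will match.
    return sha256(content).hexdigest()


def register_at_creation(registry: dict, content: bytes, owner: str, uses: set[str]) -> str:
    """Attach licensing terms to an output at the moment it is generated."""
    key = fingerprint(content)
    registry[key] = License(owner, frozenset(uses))
    return key


def monitor(registry: dict, observed: list[tuple[bytes, str]]) -> list[tuple[str, str]]:
    """Classify each observed (content, use) pair against its registered license."""
    findings = []
    for content, use in observed:
        lic = registry.get(fingerprint(content))
        if lic is None:
            status = "unregistered"
        elif use in lic.permitted_uses:
            status = "compliant"
        else:
            status = "violation"
        findings.append((use, status))
    return findings


def compliance_report(findings: list[tuple[str, str]]) -> dict[str, int]:
    """Summarize findings as counts per status for automated reporting."""
    return dict(Counter(status for _, status in findings))


registry: dict[str, License] = {}
register_at_creation(registry, b"render-0001", "example-owner", {"editorial", "internal"})
findings = monitor(registry, [
    (b"render-0001", "editorial"),    # permitted by the license
    (b"render-0001", "advertising"),  # outside the license terms
    (b"render-9999", "editorial"),    # no license on record
])
report = compliance_report(findings)
```

The design choice worth noting is ordering: terms are registered when the output is created, so the monitoring agent never has to infer ownership after the fact.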

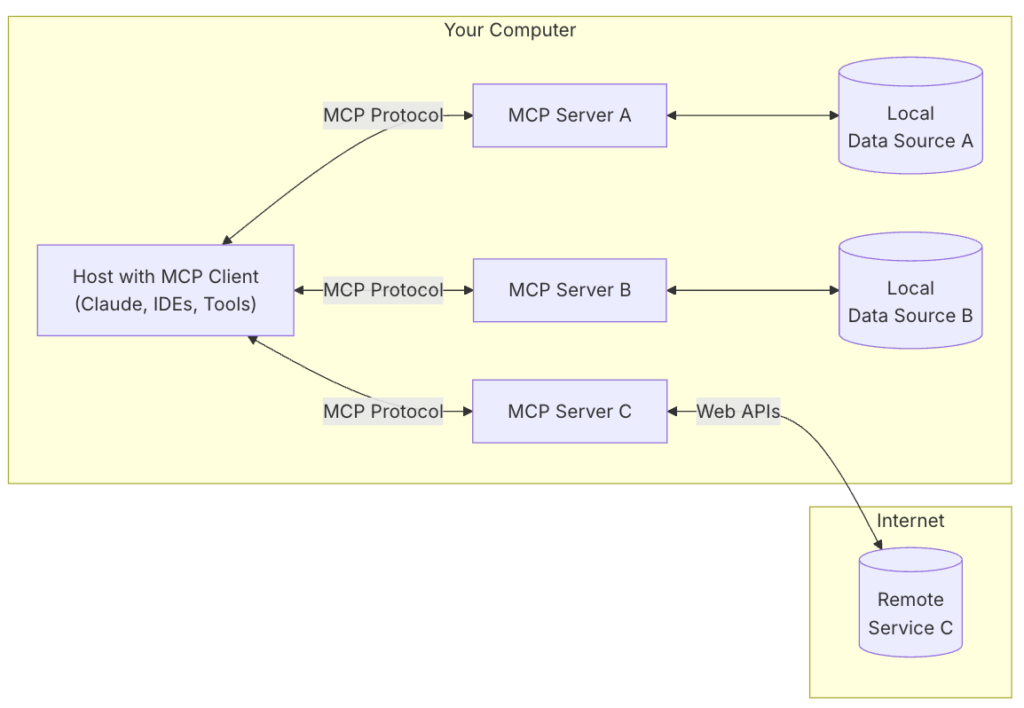

Each agent has a bounded mandate. No single node controls the entire flow. Every output is logged. Escalation paths are predefined. As on a well-run enterprise desk, authority is layered. Execution is distributed. Accountability remains human.
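The control structure described above can be sketched in Python, assuming each agent declares a fixed set of actions it may take on its own plus a predefined human role for anything outside that set. Requests are logged whether they are allowed or escalated, and a small check confirms that no single agent's mandate spans the whole workflow. The agent names, actions, and roles are hypothetical, and the sketch only records decisions; it does not dispatch real work.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Agent:
    name: str
    mandate: frozenset[str]  # the only actions this agent may take on its own
    escalate_to: str         # predefined human role for out-of-mandate requests


@dataclass
class Orchestrator:
    agents: dict[str, Agent]
    audit_log: list[dict] = field(default_factory=list)
    escalations: list[dict] = field(default_factory=list)

    def request(self, agent_name: str, action: str, payload: dict) -> bool:
        """Allow an in-mandate action or escalate it; log the request either way."""
        agent = self.agents[agent_name]
        allowed = action in agent.mandate
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_name,
            "action": action,
            "payload": payload,
            "outcome": "allowed" if allowed else "escalated",
            "escalated_to": None if allowed else agent.escalate_to,
        }
        self.audit_log.append(entry)  # every request leaves a record
        if not allowed:
            self.escalations.append(entry)  # queued for a human decision, not executed
        # A real system would dispatch allowed actions here.
        return allowed


def no_single_controller(agents, all_actions: set[str]) -> bool:
    """True if no one agent's mandate covers every action in the workflow."""
    return not any(agent.mandate >= all_actions for agent in agents)


orch = Orchestrator(agents={
    "tagger": Agent("tagger", frozenset({"tag_asset"}), escalate_to="archive-lead"),
    "monitor": Agent("monitor", frozenset({"scan_usage", "flag_usage"}), escalate_to="legal-review"),
})
first = orch.request("tagger", "tag_asset", {"asset_id": "A-001"})         # within mandate
second = orch.request("monitor", "issue_takedown", {"asset_id": "A-001"})  # outside mandate
```

Here the monitoring agent can flag misuse but cannot act on it; the takedown request lands in the escalation queue for the `legal-review` role, which keeps the consequential decision with a person.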

That is the difference between experimentation and infrastructure.

Why This Matters to Senior Leadership

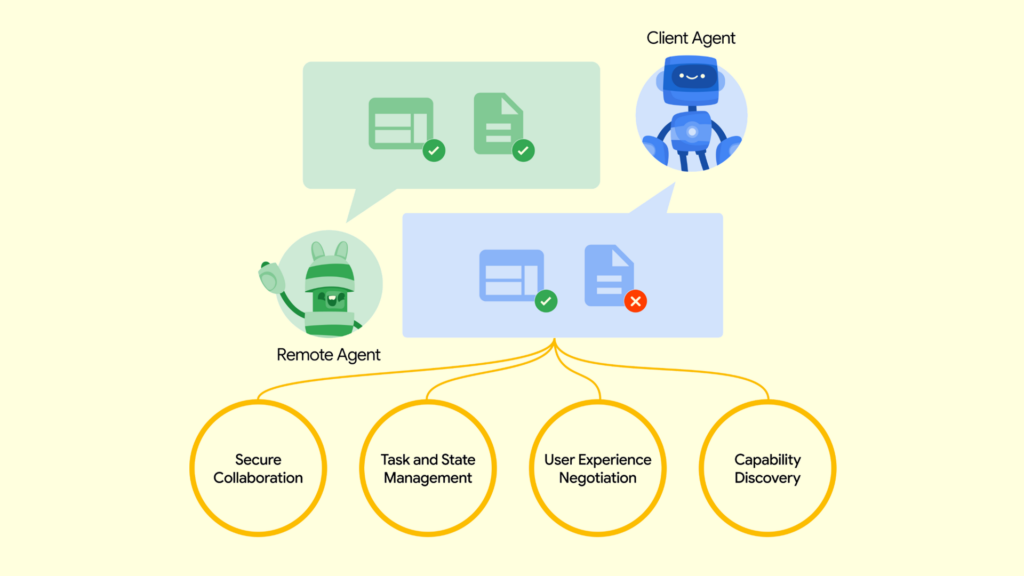

For CIOs, operating partners, and infrastructure decision-makers, the core risk is not technical failure but unmanaged velocity. Agentic systems accelerate output, and if governance architecture does not scale in parallel, exposure compounds quietly and often invisibly.

A disciplined production pipeline does three things:

- Reduces manual drag without decentralizing control

- Creates persistent institutional memory through logged workflows

- Converts AI from a cost-center experiment into an auditable operational asset

In regulated or credibility-driven environments, autonomy without traceability creates risk. When agentic systems are deliberately structured, staged in maturity, and governed by explicit policy constraints, they stop being a liability and become resilience infrastructure. The distinction is not cosmetic. It is structural. This is not about layering AI tools onto existing workflows. It is about redesigning how work moves through the institution, with autonomy embedded inside accountability rather than operating outside it.

For leaders responsible for credibility, the most significant risk of agentic AI is not technical failure per se but unmanaged success: when systems move faster than oversight can absorb, risk exposure accumulates quietly. A recent McKinsey analysis on agentic AI warns that AI initiatives can proliferate rapidly without adequate governance structures, making it difficult to manage risk unless oversight frameworks are deliberately redesigned for autonomous systems. Similarly, enterprise practitioners have cautioned that rapid deployment without structural guardrails can create a shadow governance problem, where velocity outpaces policy enforcement and exposure compounds before leadership has visibility.

Agentic systems do not create exposure through failure. They create exposure when success outpaces oversight.

The opportunity, however, is substantial. Well-designed agentic workflows reduce manual drag, surface meaningful signal earlier in the lifecycle, and preserve human judgment for decisions that matter most. By embedding traceability, auditability, and policy enforcement directly into operational workflows, organizations create durable institutional assets – documented reasoning, consistent standards, and reusable analysis that withstand turnover and regulatory scrutiny.

This is how legacy organizations scale responsibly without eroding trust or sacrificing control.

Elevator Pitch

We are not automating judgment. We are structuring production pipelines where agents ingest, analyze, monitor, and validate under explicit policy constraints, while humans remain accountable for consequential decisions. The objective is scalable output with embedded governance, not speed for its own sake.