How disciplined adoption, ethical guardrails, and human accountability turn agentic systems into usable tools

Agentic AI does not fail in legacy organizations because the technology is immature. It fails when theory outruns practice. Large, credibility-driven institutions do not need sweeping reinvention or speculative autonomy. They need systems that fit into existing workflows, respect established governance, and improve decision-making without weakening accountability. The real work is not imagining what agents might do in the future, but proving what they can reliably do today – under constraint, under review, and under human ownership.

From Manual to Agentic: The New Protocols of Knowledge Work

Most legacy organizations already operate with deeply evolved protocols for managing risk. Research, analysis, review, and publication are intentionally separated. Authority is layered. Accountability is explicit. These structures exist because the cost of error is real.

Agentic AI introduces continuity across these steps. Context persists. Intent carries forward. Decisions can be staged rather than re-initiated. This continuity is powerful, but only when paired with restraint.

In practice, adoption follows a progression:

- Manual – Human-led execution with discrete software tools

- Assistive – Agents surface signals, summaries, and anomalies

- Supervised – Agents execute bounded tasks with explicit review

- Conditional autonomy – Agents act independently within strict policy and audit constraints

Legacy organizations that succeed treat these stages as earned, not assumed. Capability expands only when trust has already been established.
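The idea that stages are earned can be sketched as a simple promotion rule. The Python below is a minimal illustration. It assumes hypothetical evidence fields (`reviewed_runs`, `error_rate`, `governance_signoff`) and invented thresholds that do not come from any particular framework, and a real organization would define its own criteria. What it shows is that autonomy rises at most one stage at a time, and only after the supporting evidence already exists.

```python
from dataclasses import dataclass
from enum import IntEnum


class Stage(IntEnum):
    """The four adoption stages, ordered from least to most autonomy."""
    MANUAL = 0
    ASSISTIVE = 1
    SUPERVISED = 2
    CONDITIONAL_AUTONOMY = 3


@dataclass
class TrustRecord:
    """Hypothetical evidence gathered while operating at the current stage."""
    reviewed_runs: int         # runs a human has reviewed at this stage
    error_rate: float          # fraction of reviewed runs that needed correction
    governance_signoff: bool   # explicit approval from the accountable owner


# Illustrative thresholds only; each organization would set its own.
MIN_REVIEWED_RUNS = 50
MAX_ERROR_RATE = 0.02


def next_stage(current: Stage, evidence: TrustRecord) -> Stage:
    """Advance at most one stage, and only when trust has already been shown."""
    if current == Stage.CONDITIONAL_AUTONOMY:
        return current  # highest stage; nothing further to earn
    earned = (
        evidence.reviewed_runs >= MIN_REVIEWED_RUNS
        and evidence.error_rate <= MAX_ERROR_RATE
        and evidence.governance_signoff
    )
    return Stage(current + 1) if earned else current
```

For example, an assistive deployment with 80 reviewed runs, a 1% correction rate, and sign-off moves to supervised. Without the sign-off, it stays where it is, regardless of how good the numbers look.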

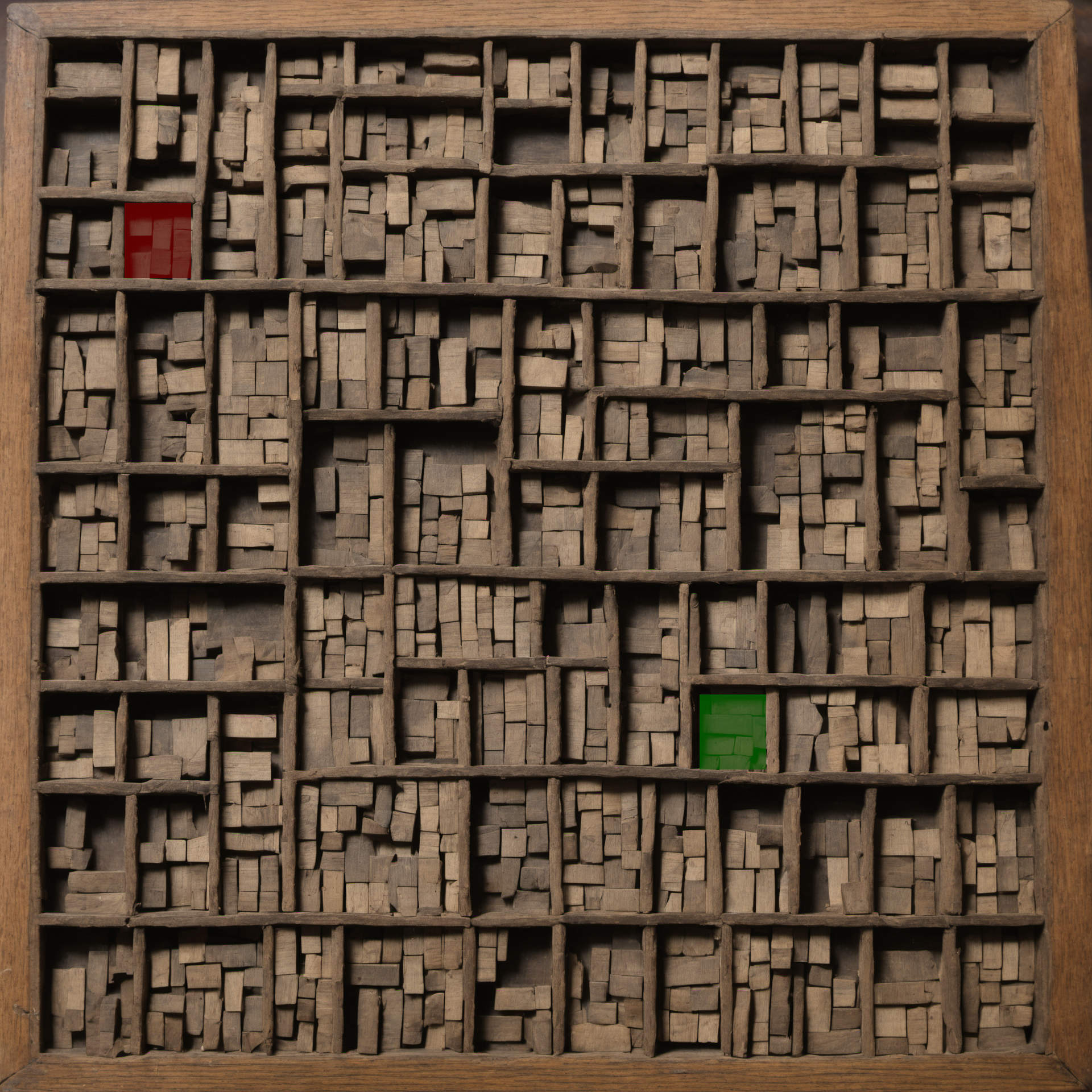

Metaphor: The Enterprise Desk

How Agentic Roles Interact

A useful way to understand agentic systems is to compare them to a well-run enterprise desk.

Information is gathered, not assumed. Analysis is performed, not published unreviewed. Risk is evaluated, not ignored. Final decisions are made by accountable humans who understand the consequences.

An agentic pipeline mirrors this structure. Each agent has a narrow mandate. No agent controls the full flow. Authority is distributed, logged, and reversible. Outputs emerge from interaction rather than a single opaque decision point.
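The desk structure maps onto a small orchestration sketch. The Python below is a hypothetical illustration: the `Pipeline`, `run_step`, `rollback`, and `decide` names are invented here and are not taken from any agent framework. Each agent may change only the fields it owns. Every step is written to an audit log with its before and after state, the most recent step can be undone, and the final call requires a named human approver.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical step signature: an agent receives a copy of the shared state and returns a proposal.
Step = Callable[[dict], dict]


@dataclass
class AuditEntry:
    agent: str
    mandate: str
    before: dict
    after: dict


@dataclass
class Pipeline:
    """Hypothetical desk-style pipeline: narrow mandates, logged and reversible steps."""
    log: list = field(default_factory=list)

    def run_step(self, agent: str, mandate: str, owns: set, step: Step, state: dict) -> dict:
        proposed = step(dict(state))  # the agent works on a shallow copy, not the live state
        touched = {k for k in set(state) | set(proposed) if state.get(k) != proposed.get(k)}
        if not touched <= owns:
            # Reject any change outside the agent's mandate instead of silently accepting it.
            raise PermissionError(f"{agent} exceeded its mandate: {sorted(touched - owns)}")
        self.log.append(AuditEntry(agent, mandate, dict(state), dict(proposed)))
        return proposed

    def rollback(self) -> dict:
        """Undo the most recent step by returning the state it started from."""
        return self.log.pop().before

    def decide(self, state: dict, approver: Optional[str]) -> dict:
        """The consequential call is reserved for a named, accountable human."""
        if not approver:
            raise PermissionError("a named human approver is required")
        return {**state, "approved_by": approver}


# Usage: two agents with separate mandates, then a human decision.
p = Pipeline()
s = p.run_step("gatherer", "collect sources", {"sources"},
               lambda st: {**st, "sources": ["filing A"]}, {})
s = p.run_step("analyst", "draft a summary", {"summary"},
               lambda st: {**st, "summary": "draft summary"}, s)
decided = p.decide(s, "desk editor")
```

The design choice here is that no agent holds the `approved_by` field. If the analyst tried to rewrite `sources`, that step would be rejected and never logged, which mirrors a desk where each role stays in its lane.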

This alignment is not cosmetic. It is what allows agentic systems to be introduced without breaking institutional muscle memory.

Visual Media: Where Restraint Becomes Non-Negotiable

Textual workflows benefit from established norms of review and correction. Visual media does not. Images and video carry implied authority, even when labeled. Errors propagate faster and linger longer.

For this reason, ethical image and video generation cannot be treated as a creative convenience. It must be governed as a controlled capability. Generation should be conditional. Provenance must be explicit. Review must be unavoidable.

In many cases, the correct agentic action is refusal or escalation, not output. The value of an agentic system is not that it can generate, but that it knows when it should not.
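One way to make refusal and escalation the default path is to route every media request through an explicit policy before anything is generated. The Python below is a minimal sketch. The request attributes, routing rules, and names (`route_media_request`, `can_publish`) are hypothetical and chosen only for illustration. A real policy would come from the organization's own standards and legal review. The sketch also attaches a provenance record to each generated asset and blocks publication until a named reviewer signs off.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class MediaAction(Enum):
    REFUSE = "refuse"
    ESCALATE = "escalate"
    GENERATE_FOR_REVIEW = "generate_for_review"


@dataclass(frozen=True)
class MediaRequest:
    """Hypothetical attributes an intake step might record for an image or video request."""
    requester_authorized: bool
    purpose_declared: bool
    depicts_real_person: bool
    depicts_real_event: bool


def route_media_request(req: MediaRequest) -> MediaAction:
    """Illustrative rules only: refusal is checked first, escalation second."""
    if not (req.requester_authorized and req.purpose_declared):
        return MediaAction.REFUSE
    if req.depicts_real_person or req.depicts_real_event:
        # Content that could be mistaken for documentation goes to a human, not a model.
        return MediaAction.ESCALATE
    return MediaAction.GENERATE_FOR_REVIEW


@dataclass
class GeneratedAsset:
    asset_id: str
    provenance: dict  # hypothetical fields, e.g. {"model": "<name>", "request_id": "<id>"}
    reviewed_by: Optional[str] = None


def can_publish(asset: GeneratedAsset) -> bool:
    """Publication requires both an explicit provenance record and a named reviewer."""
    return bool(asset.provenance) and asset.reviewed_by is not None
```

The ordering of the checks is the point. Refusal is evaluated first and escalation second. Generation happens only when neither applies, and even then the output cannot be published until a human has reviewed it.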

Why This Matters to Senior Leadership

For leaders responsible for credibility, the primary risk of agentic AI is not technical failure. It is ungoverned success. When systems move faster than oversight can absorb, they create exposure that compounds quietly.

The opportunity, however, is substantial. Well-designed agentic workflows reduce manual drag, surface meaningful signal earlier, and preserve human judgment for decisions that actually matter. They also create durable institutional assets – documented reasoning, consistent standards, and reusable analysis that survives turnover and scrutiny.

This is how legacy organizations scale without eroding trust.

Elevator Pitch (Agentic Workflows):

“We are not automating decisions. We are structuring workflows where agents gather, analyze, and validate information under clear rules, while humans remain accountable for every consequential call. The goal is reliability, clarity, and trust – not speed for its own sake.”

Agentic AI will not transform legacy organizations through ambition alone. It will do so through discipline. The institutions that succeed will not be the ones that adopt the most autonomy the fastest. They will be the ones that prove, step by step, what agents can do responsibly today. Less theory. More practice. And accountability at every turn.